Passions

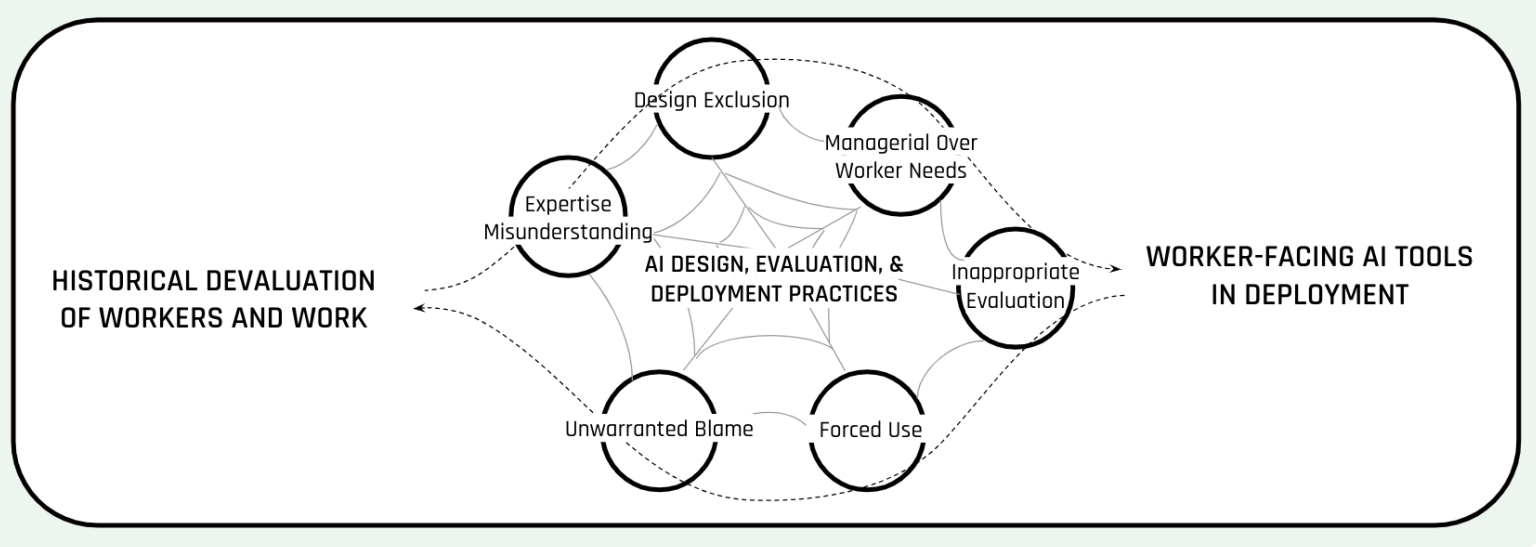

I’m working towards a future of work where decisions around AI design & deployment are made to meaningfully augment worker capabilities and protect worker power as first-order priorities. My PhD research has focused on AI for socially complex work—for example, emotional and care-oriented labor in social work, K12 teaching, and home health—where worker expertise and services provide critical infrastructure for society, yet remain chronically devalued within technology development spaces, organizations, and society more broadly.

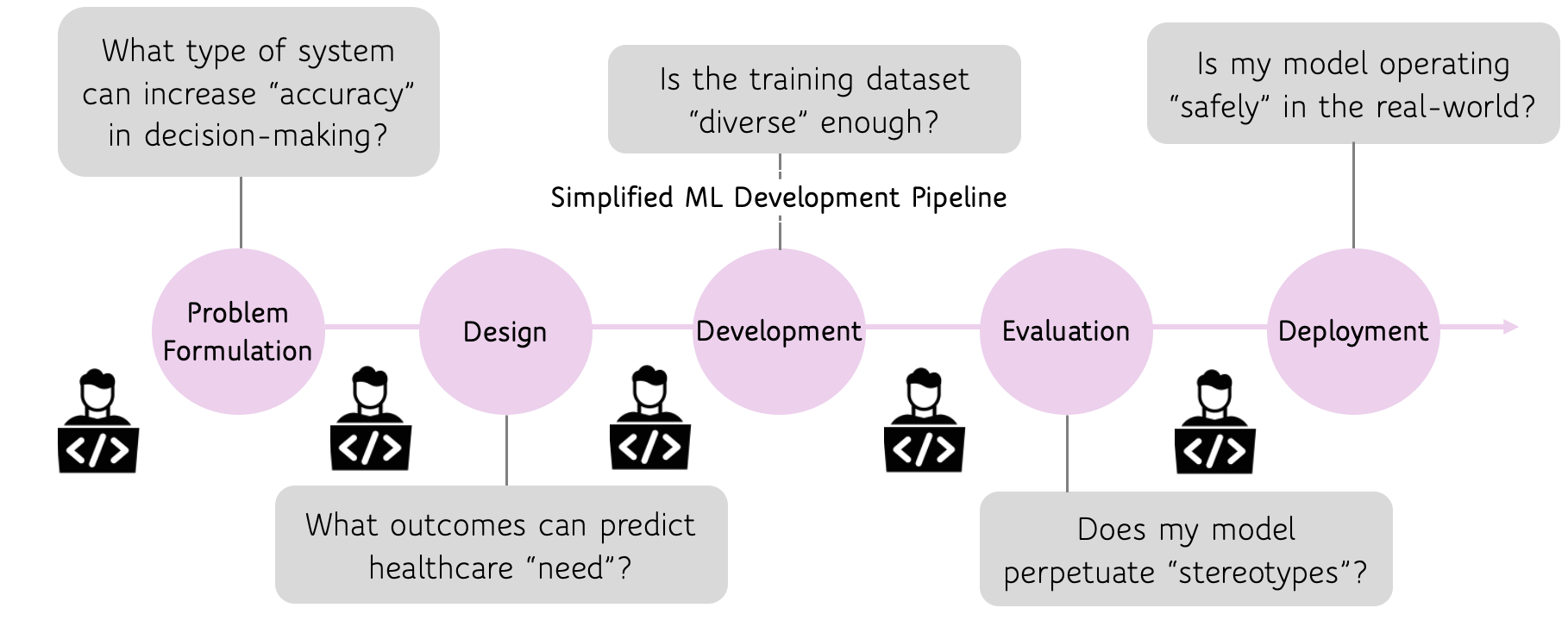

Forming new partnerships with workers, managers, and AI practitioners in these real-world organizational contexts, I use qualitative, quantitative, and design research methods to (1) understand challenges to designing and deploying AI for socially complex work. I take a social ecological approach to understand how worker- (e.g., worker practices, perceptions, training), organization- (e.g., organizational policies, incentives, pressures), and society-level (e.g., systemic issues, public policy) factors collectively shape, and are shaped by, AI design and deployment. I also (2) design novel technical and organizational interventions to address these challenges. My research often explores how best to empower worker participation in shaping decisions along the AI pipeline that would otherwise remain opaque and inaccessible to them.

I publish my research across top-tier HCI (e.g., CHI, CSCW, DIS), Responsible AI (e.g., FAccT, AIES, SaTML), and science and technology studies (Big Data & Society) venues, where it has been recognized with two Best Paper Awards. In addition to doing rigorous research, I prioritize extending the implications of my research to improve real-world AI practice and policy. My research has impacted national- and state-level policy efforts surrounding the use of AI tools for high-stakes decision-making, and has prompted public discourse via media coverage by AP News, NPR, PBS News, and others. My work has been cited in at least two policy documents, and I’ve also published policy-facing writing based on implications of my and others’ research.

Positions

I am a fifth-year PhD candidate in the Human-Computer Interaction Institute at Carnegie Mellon University's School of Computer Science, where I am fortunate to be co-advised by Ken Holstein (CoALA Lab) and Haiyi Zhu (Social AI Lab). I am an NSF GRFP Fellow, K&L Gates Presidential Fellow, and CASMI PhD Fellow, and my work has been generously supported by Google Research, Toyota Research, the National Science Foundation, and the Block Center for Technology and Society. Before starting my PhD, I was at Wellesley College, a historically women's liberal arts college, where I graduated with a BA in Computer Science (with honors) and was awarded the Trustee Scholarship and Academic Excellence Award in Computer Science.

I was previously a research intern at Microsoft Research FATE (Fairness, Accountability, Transparency, and Ethics in AI) NYC (2023) and Montréal (2022), doing research on responsible AI and the future of work. As an undergraduate research intern, I also contributed to responsible AI efforts at Microsoft Research Aether (AI Ethics and Effects in Engineering and Research). I also did computational social science research at Oxford University Centre for Technology and Global Affairs.

You can find more information in my CV.

Latest Updates

Invited talk at TU Delft

I will be giving a virtual talk on my research on designing towards positive futures for AI and work at the Academic Fringe Festival hosted by TU Delft.

November 2025

Paper forthcoming at Big Data & Society

Our paper, "AI Failure Loops in Devalued Work: The Confluence of Overconfidence in AI and Underconfidence in Worker Expertise" was accepted at Big Data & Society!

November 2025

Invited talks at Harvard & Wellesley

I will be in Boston, MA to give talks at Harvard University and Wellesley College on designing towards positive AI futures for socially complex work with and for workers.

October 2025

DC & Paper accepted at GaTech Responsible Computing Summit

I'll be in Atlanta, Georgia to participate in the Doctoral Consortium and present a poster on our work on AI in feminized labor at the 2025 Summit on Responsible Computing, AI, and Society.

October 2025

Guest Lecturer at Brown University

I will be giving a guest lecture on AI-assisted social decision-making at Brown University, hosted by Harini Suresh for her class Introductions to Sociotechnical Systems and HCI.

October 2025

Proposed Thesis

I successfully proposed my PhD research thesis, “Designing Positive AI Futures for Socially Complex Work With and For Workers.” I’m officially a PhD candidate!

September 2025

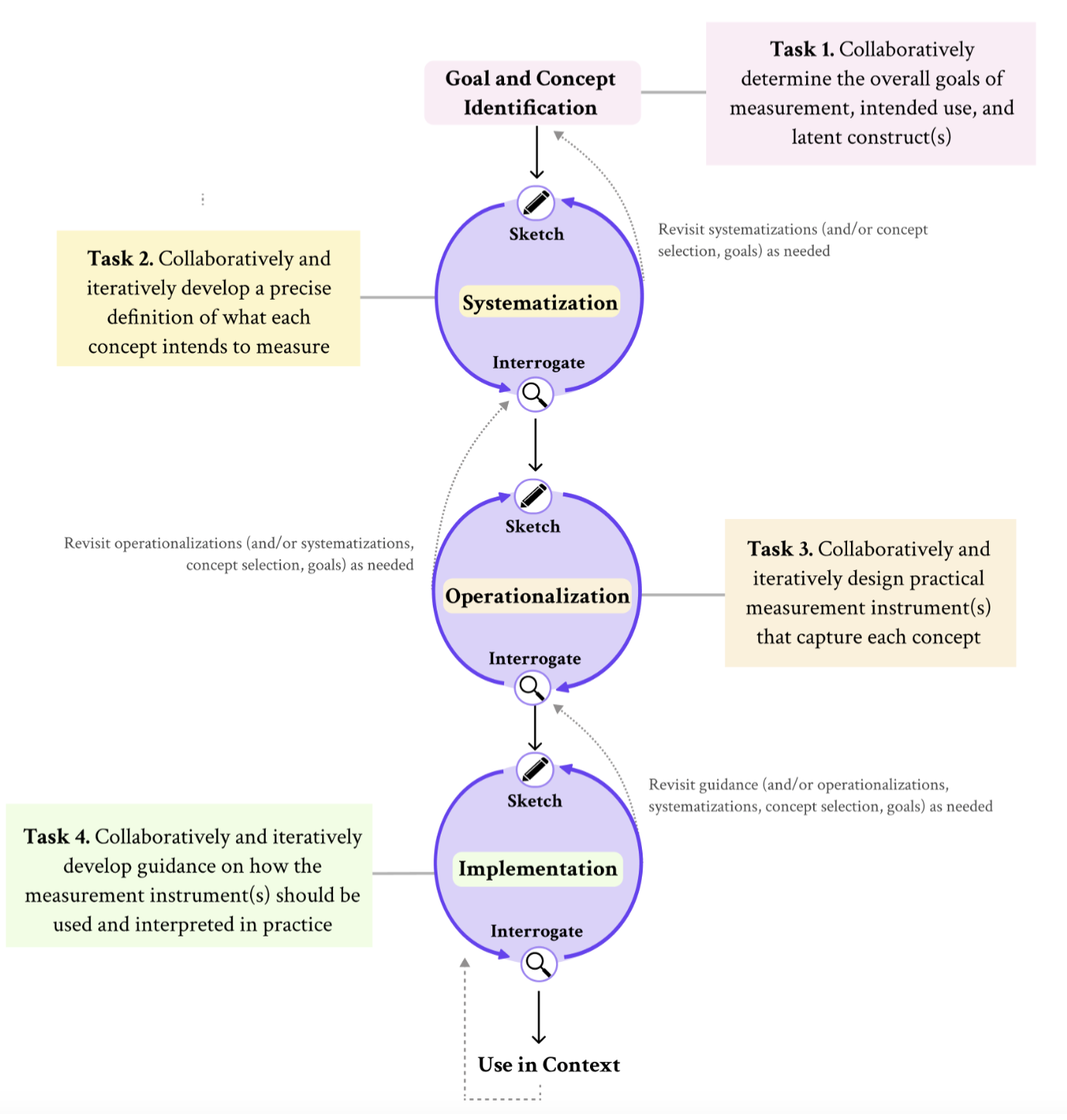

Co-organizing a FAccT 2025 tutorial

I will be in Athens, Greece to present a FAccT 2025 tutorial on supporting stakeholder participation in AI measurement. This is a joint collaboration with researchers across MSR FATE and STAC, CDT, UMSI, and Stanford.

June 2025

Invited talk at UPenn Wharton AI and the Future of Work Conference

I will be in Philadelphia, PA to give a talk on our work on the impacts of AI on feminized labor.

May 2025

Invited expert at convening on predictive analytics in child welfare

I will be attending the international expert convening on predictive analytics in child welfare to share my research expertise on the topic, hosted by University of Bristol.

March 2025

Policy memo published with the FAS

Our policy memo on expanding state and local government capacity for more responsible AI procurement practices was published by the FAS (Federation of American Scientists).

February 2025

Invited talk at Cohere for AI

I will be giving a talk on ongoing research on scaffolding AI measurement as a collaborative design practice at the Open Science Community Event, hosted by Cohere for AI.

December 2024

Invited expert at FAS policy summit

I will be in Washington, DC to attend a policy summit hosted by the FAS.

December 2024

Presenting paper & co-organizing workshop at CSCW 2024

I will be in Costa Rica to present our paper on public sector agencies' practices around AI adoption and co-organize a workshop on labor, visibility, and technology.

November 2024

Two special recognitions for outstanding reviews

I received two special recognitions for my paper reviews for the CHI conference.

November 2024

Guest Lecturer at Carnegie Mellon University

I will be giving a guest lecture on Automation and AI in the Workplace, hosted by Laura Dabbish at Carnegie Mellon University's Human Factors Course.

November 2024

Awarded Google Academic Research Award

I am grateful to be awarded the Google Academic Research Award (co-written with my co-advisors) to fund our research on supporting AI measurement design as a collaborative design process.

October 2024

Presenting papers at AIES 2024

I will be in San Jose, California to present two papers: one that examines the efficacy of responsible AI toolkits from policy and civil society perspectives, and another on the impacts of AI on feminized labor.

October 2024

Invited expert at convening on public sector AI

I will be attending the Expert Convening on Public Sector AI to share my research expertise on the topic, hosted by Roosevelt Institute.

October 2024

Presenting a paper at CHI 2024

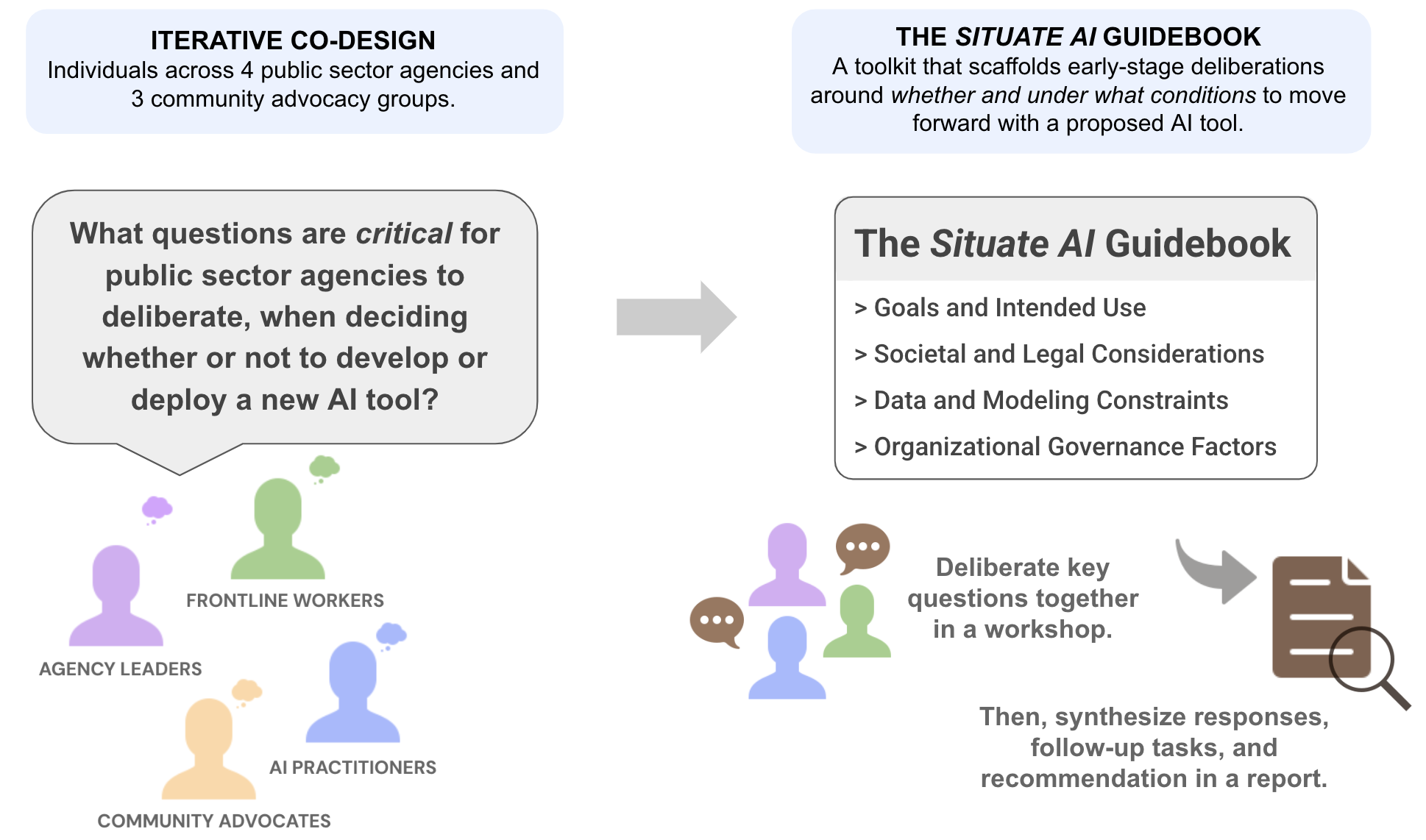

I will be in Honolulu, Hawaii to present our paper on the Situate AI Guidebook: A toolkit to scaffold early-stage multi-stakeholder deliberations around AI design concepts.

May 2024

Guest Lecturer at Carnegie Mellon University

I will be giving a guest lecture on AI in social decision-making, hosted by Motahhare Eslami at Carnegie Mellon University's Human-AI Interaction Course.

January 2024

Guest Lecturer at Carnegie Mellon University

I will be giving a guest lecture on automation and AI in the workplace, hosted by Laura Dabbish at Carnegie Mellon University's Human Factors Course.

November 2023

Presenting a paper at ACM Collective Intelligence 2023

I will be in Delft, Netherlands to present our paper on supporting worker training on AI-assisted decision-making at ACM Collective Intelligence / HCOMP.

November 2023

Presenting a paper at EAAMO 2023

I will be in Boston, MA to give a talk on our paper on understanding public sector agencies' AI adoption practices and challenges at EAAMO 2023.

October 2023

Invited talk at Wellesley College

I will be in Boston, MA to give a talk on supporting responsible AI practices in public sector contexts, hosted by my former advisor Eni Mustafaraj at Wellesley College.

October 2023

Presenting paper & co-organizing workshop at CSCW 2023

Looking forward to attending CSCW 2023 in Minneapolis to present my internship work and co-organize our workshop on community-driven AI.

October 2023

Completed MSR internship

I had a wonderful summer interning with Microsoft Research FATE in NYC, where I was fortunate to be advised by Alexandra Chouldechova and Daricia Wilkinson.

September 2023

Awarded the NSF GRFP

I am honored to be awarded the NSF Graduate Research Fellowship.

March 2023

Won a Best Paper Award

Our paper that translates validity concepts to examine data-driven decision-making algorithms won the best paper award at SaTML 2023.

February 2023

Paper presentation at SaTML 2023

I will be in Raleigh, North Carolina to present our paper at the SaTML (Secure and Trustworthy Machine Learning) Conference.

February 2023

Co-organizing a workshop at CSCW 2022

I am virtually attending CSCW 2022 to co-organize our workshop on collaborating with local government agencies to do research on public interest AI.

November 2022

Awarded the K&L Gates Ethics and AI Presidential Fellowship

I am grateful to be one of three PhD students at CMU to be awarded the K&L Gates fellowship to fund research on ethics and computational science and technology.

November 2022

Invited talk at INFORMS 2022

I will be in Indianapolis, IN to give a joint talk with Luke Guerdan at INFORMS (The Institute for Operations Research and the Management Sciences) on Understanding Human-AI Decision-making in the Real-world: From Observational Studies to Theoretical Models.

October 2022

Invited expert at Augmented Intelligence at Work Symposium

I will be in Hanover, Germany as one of 50 researchers invited to attend the International Symposium of Augmented Intelligence, hosted by the University of Cambridge, the RWTH Aachen University, and the Volkswagen Stiftung.

October 2022

Completed MSR internship

I had a fantastic summer interning with Microsoft Research FATE in Montréal (remote), where I was fortunate to be advised by Koustuv Saha, Vera Liao, Alexandra Olteanu, Jina Suh, and Shamsi Iqbal.

August 2022

Presenting a paper at DIS 2022

I will be virtually presenting our paper on exploring worker-AI interface design concepts for AI-assisted social decision-making at DIS 2022.

June 2022

Presenting a paper at CHI 2022

I will be in New Orleans, LA to present our CHI 2022 paper on understanding social workers' practices and challenges making AI-assisted child maltreatment screening decisions.

April 2022

Won a Best Paper Honorable Mention Award

Our CHI 2022 paper won the best paper honorable mention award.

April 2022

Started PhD

I started my PhD at CMU HCII!

September 2021

Select Research Themes

You can find a full list of publications here.

WORKER-AI INTERACTION

Designing Towards Worker-AI Complementarity

Improving Human-AI Partnerships in Child Welfare: Understanding Worker Practices, Challenges, and Desires for Algorithmic Decision Support

Anna Kawakami, Venkatesh Sivaraman, Hao-Fei Cheng, Logan Stapleton, Yanghuidi Cheng, Diana Qing, Adam Perer, Zhiwei Steven Wu, Haiyi Zhu, and Kenneth Holstein.

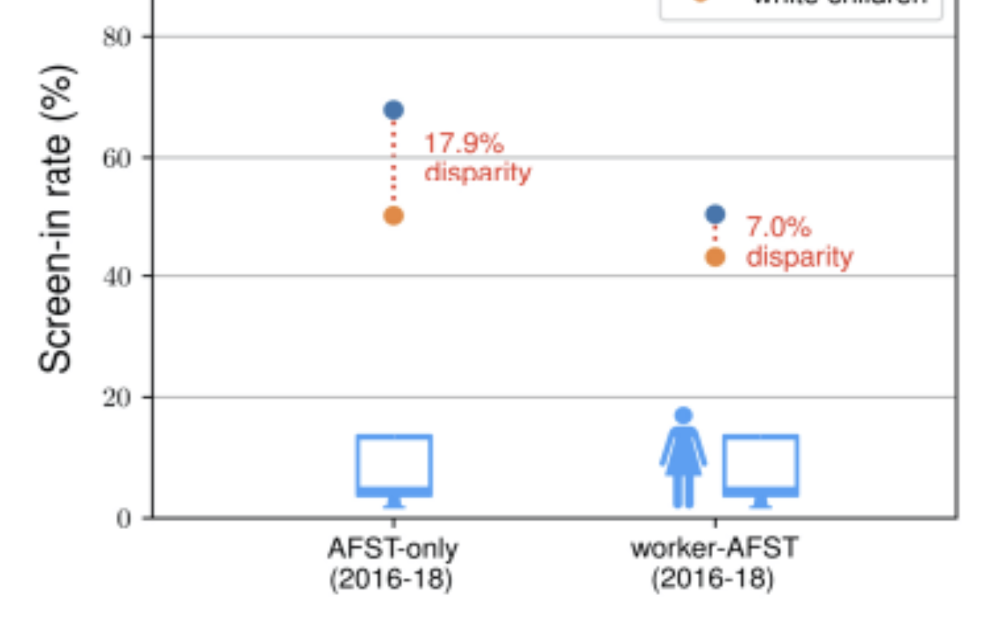

How Child Welfare Workers Reduce Racial Disparities in Algorithmic Decisions

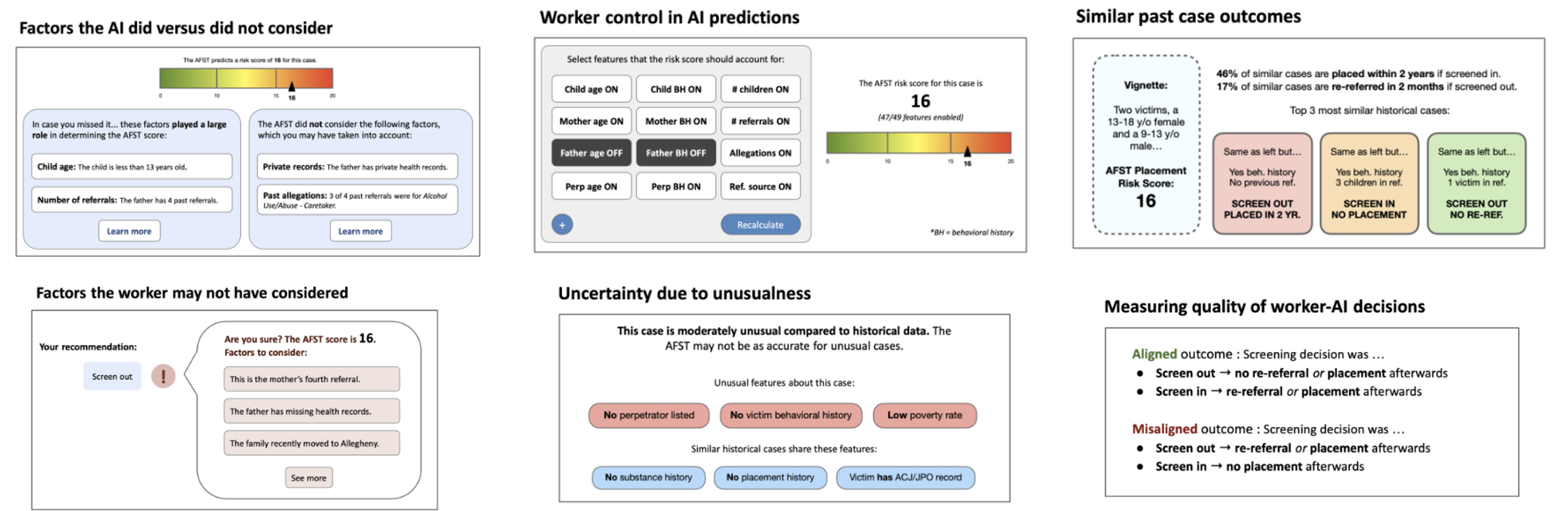

“Why Do I Care What’s Similar?” Probing Challenges in AI-Assisted Child Welfare Decision-Making through Worker-AI Interface Design Concepts

Training Towards Critical Use: Learning to Situate AI Predictions Relative to Human Knowledge

ORGANIZATION-AI INTERACTION

Improving AI Governance in the Public Sector

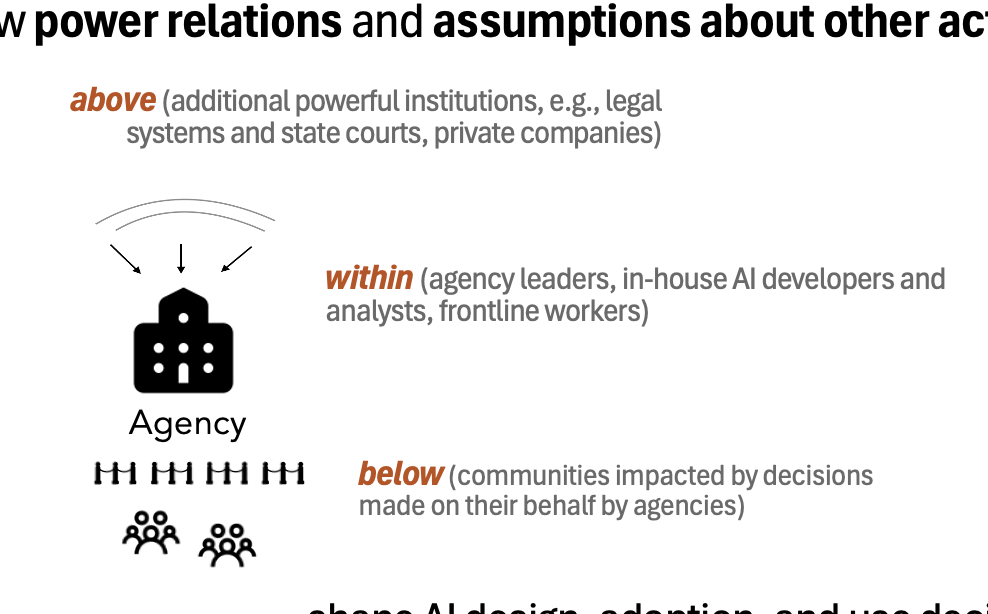

Studying Up Public Sector AI: How Networks of Power Relations Shape Agency Decisions Around AI Design and Use

The Situate AI Guidebook: Co-designing a Toolkit to Support Multi-stakeholder, Early-stage Deliberations Around Public Sector AI Proposals

A Federal Center of Excellence to Expand State and Local Government Capacity for AI Procurement and Use

SOCIETY-AI INTERACTION

Re-envisioning Measurement of Worker and AI Capabilities

AI Failure Loops: The Confluence of Overconfidence in AI and Underconfidence in Worker Expertise

AI Measurement as a Collaborative Design Practice: A Multidisciplinary Framework and Research Agenda

Translation Tutorial: AI Measurement as a Stakeholder-Engaged Design Practice

Resources

Resources others have created that I found helpful when applying to grad school. All credits go to their respective owners.

- For applying to grad school: Emma Lurie’s Aspirational Grad School Timeline.

I didn’t follow this exactly but it was a good frame of reference. Also many HCI / I-School and CS PhD programs removed the GRE requirement in the past year. It could make sense to make a list of programs you'd definitely be excited to attend before studying for the GRE (maybe you'll find you don't need to take it, like me!).

- For navigating undergraduate research: Emma Lurie's Tips for Working with an Undergraduate Research Advisor.

Every lab is different but this guide is spot-on for Eni's Wellesley Cred Lab. I like to read through the common scenarios for validation.

- For finding potential advisors: Eric Gilbert’s Syllabus for Eric's PhD students.

I especially like the sections on Ideas and Doing research. He also links an awesome PhD meeting agenda template that I ~aspire~ to follow.

- For getting feedback on application materials: Jia-Bin Huang's Twitter thread on student-led pre-application programs for supporting PhD students.

If you're interested in Carnegie Mellon's HCI PhD program (or any other CS-related PhD program at CMU), you could get feedback on your application materials from a wonderful team of PhD student volunteers: Graduate Application Support Program.

If you think there’s anything I can help with, please don't hesitate to email me directly!